ChatGPT Health sounds promising — but I don’t trust it with my medical data just yet

Convenience isn’t worth giving up control over my most sensitive data

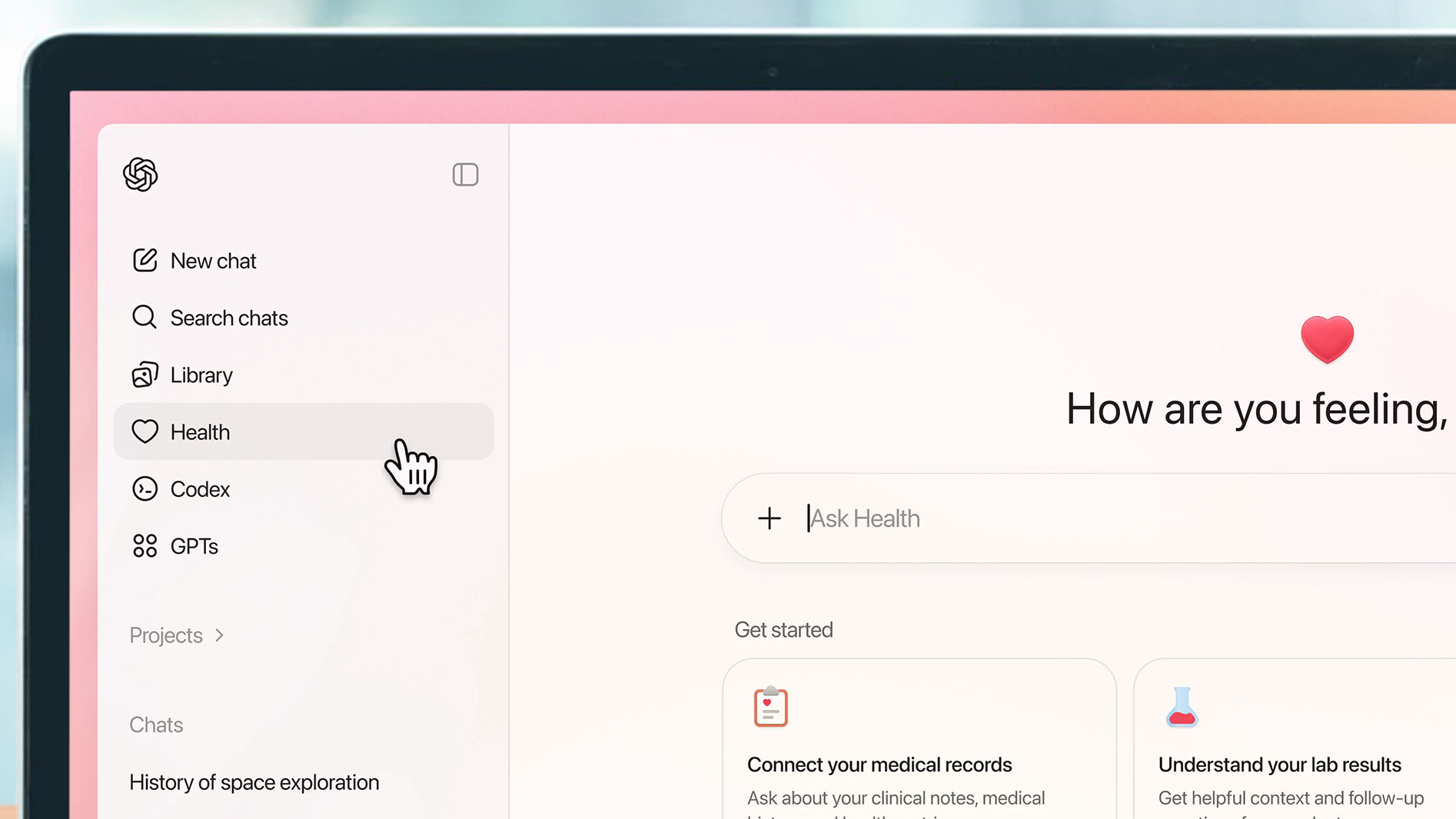

When it launched ChatGPT Health this month, OpenAI promised new ways for AI to support your medical care. The chatbot's newest feature is a dedicated health space, something like a virtual clinic, that can process your electronic medical records (EMRs) and data from fitness and health apps to give personalized responses about lab results, diet, exercise, and preparing for doctor visits.

With more than 230 million people asking health and wellness questions every week, ChatGPT Health was inevitable. But despite OpenAI's hype about ChatGPT Health's value and its assurances of extra security and privacy, I won't be signing up any time soon.

The central issue is trust. As impressed as I've been with ChatGPT and most of its features, I remain skeptical that any tool capable of casually hallucinating nonsense should be relied upon for anything but the most basic of health questions.

And even if I did trust ChatGPT as a personal health advisor, I wouldn't want to give it my actual medical data. I've already shared more than a few details of my life with ChatGPT while testing its abilities, and I've occasionally felt uneasy about doing so. I feel far less sanguine about adding my EMRs to the list. I instinctively recoil from the idea of giving a company with a profit motive and an imperfect data security record access to my most sensitive health information.

Healthy distrust

I can see why ChatGPT Health might entice people, even with all its caveats about what it can and can't do for you. Healthcare systems are complicated, often overstretched, and can be financially draining. ChatGPT Health can provide clearer explanations of medical jargon, highlight things worth discussing with a doctor or nurse before an appointment, and immediately parse confusing test results.

ChatGPT Health entices with its ability to deliver insights that were previously available only through professional channels. It's an easy sell, especially if you lack regular access to medical care. But sensitive personal health information and AI's track record of hallucination make for an unhealthy brew.

Misleading or fabricated answers are bad enough in any context, and medicine adds a need for nuance that raises the stakes. It's not that I'm against asking ChatGPT questions about my health or fitness, but that's a far cry from the kind of data ChatGPT Health invites you to share.

“Exploring an isolated health question is fundamentally different from giving a platform access to your full medical record and lifetime health history," explained Alexander Tsiaras, founder and CEO of medical data platform StoryMD. "Trust will be the central challenge, not just for individuals but for the healthcare system as a whole. That trust depends on transparency: how data is ingested, how hallucinations are prevented, how longitudinal clinical evidence is incorporated over time, and whether the platform operates within established regulatory frameworks, including HIPAA."

OpenAI is not a healthcare provider, so the Health Insurance Portability and Accountability Act (HIPAA), with its strict legal obligations and safeguards for your medical information, doesn't apply. So, while OpenAI might sincerely promise to treat any health data you upload or connect to ChatGPT Health with matching care and security, it's unclear whether the company has the same legal incentive to do so.

And when data is outside HIPAA's reach, you have to rely on a company's own policies and intentions rather than on enforceable legal standards. Some people might argue that OpenAI's own privacy commitments are sufficient (lawyers at OpenAI, for instance).

Health data control

Most people would agree that intimate medical information should be locked down under the tightest legal protections possible. But ChatGPT Health users will be in a precarious position, especially because policy language and terms of service can change with little notice and limited recourse.

Keeping health conversations isolated and letting users delete their data are good ideas on ChatGPT Health's part, but once your data leaves the traditional healthcare system, you face a whole different set of vulnerabilities. Headlines underscoring how difficult it is for major tech platforms to guarantee airtight protection against leaks and hacks are far too frequent.

And there's more risk to your data, even from legitimate agencies. Private health data could be subject to subpoenas, legal discovery, or other forms of compelled disclosure. In some cases, companies can be forced to hand over private records to satisfy court orders or government requests. HIPAA has much stronger defenses against such legal pressures than the standard consumer tech privacy laws under which ChatGPT operates.

Affordable, accessible healthcare is a cause worth pursuing. But privacy, trust, and meaningful human oversight cannot be casualties in that pursuit. People are already concerned about how their data is collected and used by AI platforms. Centralizing even more sensitive information with a single commercial entity won't reduce those concerns.

There's a bigger conversation to be had about incorporating AI into systems that human beings depend on. AI can be a huge boon for healthcare. But realizing that potential shouldn't depend on surrendering personal data to uncertain security and safety practices.

"These are foundational requirements," Tsiaras said. "What people actually need is a clinically sound, longitudinal medical record that supports meaningful patient and patient-provider interaction, not another layer of noise.”

Eric Hal Schwartz is a freelance writer for TechRadar with more than 15 years of experience covering the intersection of the world and technology. For the last five years, he served as head writer for Voicebot.ai and was on the leading edge of reporting on generative AI and large language models. He's since become an expert on the products of generative AI models, such as OpenAI’s ChatGPT, Anthropic’s Claude, Google Gemini, and every other synthetic media tool. His experience runs the gamut of media, including print, digital, broadcast, and live events. Now, he's continuing to tell the stories people want and need to hear about the rapidly evolving AI space and its impact on their lives. Eric is based in New York City.